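Run headless Steam in an LXC so that any computer at home can play games on the server's GPU. The end result: Steam streams games from the home server to another computer, a phone, or a Shield, while the same GPU stays available for AI workloads.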

Context

An earlier post already covered how to use an Nvidia GPU device inside an LXC. With a 3090 on hand, it naturally has to serve both gaming and AI.

Related posts:

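An earlier post advised against running Docker inside an LXC, but sharing a consumer GPU across workloads makes Docker-in-LXC pretty much unavoidable. The recommendation here is therefore to keep the Proxmox LXC on a simple filesystem such as EXT4; on ZFS it will probably take NVMe storage to run well.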

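From one angle, all this tinkering is unnecessary. Running several containers on a single Ubuntu box, with Ollama next to a steam-headless Docker container, is nothing unusual. The only reason to go to this trouble is to keep the applications "independent" of each other and to keep Proxmox's snapshot and backup/recovery per GPU application. With plain GPU passthrough (covered in a separate post), a single VM could of course run multiple Docker containers. But that gives up managing each GPU application separately in Proxmox, and it forces all future GPU use onto that one VM, which can be quite a nuisance.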

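Also, to get really good results, it is strongly recommended to connect a monitor to the GPU, or to plug in a dummy display plug. Otherwise steam-headless has to emulate a display device, which makes some settings unavailable and severely limits GPU performance. Searching Amazon for "display port dummy plug" will turn one up.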

Set up Steam-Headless

This part is already available off the shelf. The remote-gaming capability here is provided by the steam-headless project.

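The project's .md documentation deserves a careful read. Create every directory it describes exactly as documented, since this Docker container is fairly complex. Many of the relevant settings also live in the .env file, which needs a careful read too.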

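The version that finally worked looks like this:

- The Docker compose file is the non-privileged variant.

- Every device the GPU uses (/dev/nvidia*) is mapped one by one. With the container toolkit installed this step should in theory be unnecessary, but in practice only the explicit per-device mapping worked.

- Pay particular attention to every device listed here: each one needs matching treatment in the LXC config.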

docker-compose.yaml:

    version: "3.8"

    services:
      steam-headless:
        image: josh5/steam-headless:latest
        restart: unless-stopped
        shm_size: ${SHM_SIZE}
        ipc: host # Could also be set to 'shareable'
        ulimits:
          nofile:
            soft: 1024
            hard: 524288
        cap_add:
          - NET_ADMIN
          - SYS_ADMIN
          - SYS_NICE
        security_opt:
          - seccomp:unconfined
          - apparmor:unconfined

        # GPU PASSTHROUGH
        deploy:
          resources:
            reservations:
              # Enable support for NVIDIA GPUs.
              #
              # Ref: https://docs.docker.com/compose/gpu-support/#enabling-gpu-access-to-service-containers
              devices:
                - capabilities: [gpu]
                  device_ids: ["${NVIDIA_VISIBLE_DEVICES}"]

        # NETWORK:
        ## NOTE: With this configuration, if we do not use the host network, then physical device input
        ##       is not possible and your USB connected controllers will not work in steam games.
        network_mode: host
        hostname: ${NAME}
        extra_hosts:
          - "${NAME}:127.0.0.1"

        # ENVIRONMENT:
        ## Read all config variables from the .env file
        environment:
          # System
          - TZ=${TZ}
          - USER_LOCALES=${USER_LOCALES}
          - DISPLAY=${DISPLAY}
          # User
          - PUID=${PUID}
          - PGID=${PGID}
          - UMASK=${UMASK}
          - USER_PASSWORD=${USER_PASSWORD}
          # Mode
          - MODE=${MODE}
          # Web UI
          - WEB_UI_MODE=${WEB_UI_MODE}
          - ENABLE_VNC_AUDIO=${ENABLE_VNC_AUDIO}
          - PORT_NOVNC_WEB=${PORT_NOVNC_WEB}
          - NEKO_NAT1TO1=${NEKO_NAT1TO1}
          # Steam
          - ENABLE_STEAM=${ENABLE_STEAM}
          - STEAM_ARGS=${STEAM_ARGS}
          # Sunshine
          - ENABLE_SUNSHINE=${ENABLE_SUNSHINE}
          - SUNSHINE_USER=${SUNSHINE_USER}
          - SUNSHINE_PASS=${SUNSHINE_PASS}
          # Xorg
          - ENABLE_EVDEV_INPUTS=${ENABLE_EVDEV_INPUTS}
          - FORCE_X11_DUMMY_CONFIG=${FORCE_X11_DUMMY_CONFIG}
          # Nvidia specific config
          - NVIDIA_DRIVER_CAPABILITIES=${NVIDIA_DRIVER_CAPABILITIES}
          - NVIDIA_VISIBLE_DEVICES=${NVIDIA_VISIBLE_DEVICES}
          - NVIDIA_DRIVER_VERSION=${NVIDIA_DRIVER_VERSION}

        # DEVICES:
        devices:
          # Use the host fuse device [REQUIRED].
          - /dev/fuse
          # Add the host uinput device [REQUIRED].
          - /dev/uinput
          # Add NVIDIA HW accelerated devices [OPTIONAL].
          # NOTE: If you use the nvidia container toolkit, this is not needed.
          #       Installing the nvidia container toolkit is the recommended method for running this container
          - /dev/nvidia0
          - /dev/nvidiactl
          - /dev/nvidia-modeset
          - /dev/nvidia-uvm
          - /dev/nvidia-uvm-tools
          - /dev/nvidia-caps/nvidia-cap1
          - /dev/nvidia-caps/nvidia-cap2
        # Ensure container access to devices 13:*
        device_cgroup_rules:
          - 'c 13:* rmw'

        # VOLUMES:
        volumes:
          # The location of your home directory.
          - ${HOME_DIR}/:/home/default/:rw
          # The location where all games should be installed.
          # This path needs to be set as a library path in Steam after logging in.
          # Otherwise, Steam will store games in the home directory above.
          - ${GAMES_DIR}/:/mnt/games/:rw
          # The Xorg socket.
          - ${SHARED_SOCKETS_DIR}/.X11-unix/:/tmp/.X11-unix/:rw
          # Pulse audio socket.
          - ${SHARED_SOCKETS_DIR}/pulse/:/tmp/pulse/:rw

- .env file: the web UI is set to VNC (Neko could not be made to work).

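  - FORCE_X11_DUMMY_CONFIG is set to false because a dummy plug is already in use; with no monitor attached it would need to be true.
  - NVIDIA_DRIVER_CAPABILITIES is set to all. graphics was tried and seemed to cost a lot of performance. With a dummy plug or monitor attached, and with all the devices mapped into the LXC anyway, all is the more dependable choice.
  - PUID and PGID are both 1000.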

.env file:

    # System
    NAME=SteamHeadless
    TZ=America/Vancouver
    USER_LOCALES=en_US.UTF-8 UTF-8
    DISPLAY=:55
    SHM_SIZE=2G
    ## HOME_DIR:
    ##   Description: The path to the home directory on your host. Mounts to `/home/default` inside the container.
    HOME_DIR=/opt/container-data/steam-headless/home
    ## SHARED_SOCKETS_DIR:
    ##   Description: Shared sockets such as pulse audio and X11.
    SHARED_SOCKETS_DIR=/opt/container-data/steam-headless/sockets
    ## GAMES_DIR:
    ##   Description: The path to the games directory on your host. Mounts to `/mnt/games` inside the container.
    GAMES_DIR=/mnt/games

    # Default User
    PUID=1000
    PGID=1000
    UMASK=000
    USER_PASSWORD=changeme!

    # Mode
    ## MODE:
    ##   Options: ['primary', 'secondary']
    ##   Description: Steam Headless containers can run in a secondary mode that will only start
    ##                a Steam process that will then use the X server of either the host or another
    ##                Steam Headless container running in 'primary' mode.
    MODE=primary

    # Services
    # Web UI
    ## WEB_UI_MODE:
    ##   Options: ['vnc', 'neko', 'none']
    ##   Description: Configures the WebUI to use for accessing the virtual desktop.
    ##   Supported Modes: ['primary']
    WEB_UI_MODE=vnc
    ## ENABLE_VNC_AUDIO:
    ##   Options: ['true', 'false']
    ##   Description: Enables audio for the VNC Web UI if 'WEB_UI_MODE' is set to 'vnc'.
    ENABLE_VNC_AUDIO=true
    ## PORT_NOVNC_WEB:
    ##   Description: Configure the port to use for the WebUI.
    PORT_NOVNC_WEB=8083
    ## NEKO_NAT1TO1:
    ##   Description: Configure nat1to1 for the neko WebUI if it is enabled by setting 'WEB_UI_MODE' to 'neko'.
    ##                This will need to be the IP address of the host.
    NEKO_NAT1TO1=10.0.2.71

    # Steam
    ## ENABLE_STEAM:
    ##   Options: ['true', 'false']
    ##   Description: Enable Steam to run on start. This will also cause steam to restart automatically if closed.
    ##   Supported Modes: ['primary', 'secondary']
    ENABLE_STEAM=true
    ## STEAM_ARGS:
    ##   Description: Additional steam execution arguments.
    STEAM_ARGS=-silent

    # Sunshine
    ## ENABLE_SUNSHINE:
    ##   Options: ['true', 'false']
    ##   Description: Enable Sunshine streaming service.
    ##   Supported Modes: ['primary']
    ENABLE_SUNSHINE=false
    ## SUNSHINE_USER:
    ##   Description: Set the Sunshine service username.
    SUNSHINE_USER=admin
    ## SUNSHINE_PASS:
    ##   Description: Set the Sunshine service password.
    SUNSHINE_PASS=admin

    # Xorg
    ## ENABLE_EVDEV_INPUTS:
    ##   Available Options: ['true', 'false']
    ##   Description: Enable Keyboard and Mouse Passthrough. This will configure the Xorg server to catch all
    ##                evdev events for Keyboard, Mouse, etc.
    ##   Supported Modes: ['primary']
    ENABLE_EVDEV_INPUTS=true
    ## FORCE_X11_DUMMY_CONFIG:
    ##   Available Options: ['true', 'false']
    ##   Description: Forces the installation of xorg.dummy.conf. This should be used when your output device does not have a monitor connected.
    ##   Supported Modes: ['primary']
    FORCE_X11_DUMMY_CONFIG=false

    # Nvidia specific config (not required for non Nvidia GPUs)
    ## NVIDIA_DRIVER_CAPABILITIES:
    ##   Options: ['all', 'compute', 'compat32', 'graphics', 'utility', 'video', 'display']
    ##   Description: Controls which driver libraries/binaries will be mounted inside the container.
    ##   Supported Modes: ['primary', 'secondary']
    NVIDIA_DRIVER_CAPABILITIES=all
    ## NVIDIA_VISIBLE_DEVICES:
    ##   Available Options: ['all', 'none', '<GPU UUID>']
    ##   Description: Controls which GPUs will be made accessible inside the container.
    ##   Supported Modes: ['primary', 'secondary']
    NVIDIA_VISIBLE_DEVICES=all
    ## NVIDIA_DRIVER_VERSION:
    ##   Description: Specify a driver version to force installation.
    ##                Not meant to be used if nvidia container toolkit is installed.
    ##                Detect current host driver installed with `nvidia-smi 2> /dev/null | grep NVIDIA-SMI | cut -d ' ' -f3`
    ##   Supported Modes: ['primary', 'secondary']
    NVIDIA_DRIVER_VERSION=

- Following the project's instructions, create /opt/container-services/steam-headless and /opt/container-data/steam-headless/ inside the LXC, then run chown -R 1000:1000 on them.

- Put docker-compose.yaml and the .env file in /opt/container-services/steam-headless/.

- Run docker compose up first and check the log for errors: a missing device, a problem with fuse, or a problem with AppArmor. Once everything works, docker compose up -d is all that is needed.
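The directory step above can be sketched as a small shell function. This is a minimal sketch, not the project's official setup script: the function name `setup_steam_dirs` and its optional path prefix are hypothetical, and the list of subdirectories is an assumption derived from HOME_DIR, SHARED_SOCKETS_DIR, and the volume mounts shown in this post.

```shell
#!/usr/bin/env bash
# Sketch: create the directories this post's .env and compose file point at,
# then hand them to PUID:PGID. The function name and the prefix argument are
# hypothetical; the prefix only exists so the function can be tried in a scratch dir.
setup_steam_dirs() {
    local prefix="${1:-}"           # empty means the real /opt paths
    local owner="${2:-1000:1000}"   # PUID:PGID from the .env file
    local services="$prefix/opt/container-services/steam-headless"
    local data="$prefix/opt/container-data/steam-headless"

    # home maps to HOME_DIR; sockets/.X11-unix and sockets/pulse map to
    # the two SHARED_SOCKETS_DIR volume mounts in the compose file.
    mkdir -p "$services" \
             "$data/home" \
             "$data/sockets/.X11-unix" \
             "$data/sockets/pulse" || return 1

    # Changing the owner to another user requires root.
    chown -R "$owner" "$services" "$data"
}
```

Inside the LXC, calling `setup_steam_dirs` with no arguments as root would create the real paths owned by 1000:1000. GAMES_DIR (/mnt/games) is left out on purpose, since it may be a separate mount.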

Set up LXC

The real crux is here:

- The LXC must be privileged; otherwise Docker does not run in this setup.

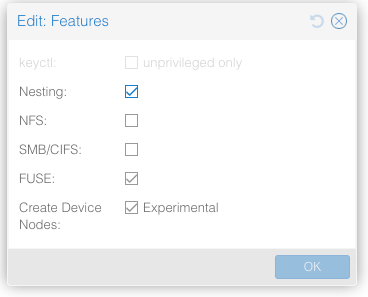

- In the LXC Options, under Features, FUSE, mknod, and nesting must all be enabled.

- The contents of /etc/pve/lxc/###.conf are as follows:

      arch: amd64
      cores: 12
      features: fuse=1,mknod=1,nesting=1
      hostname: testbox
      memory: 16384
      net0: name=eth0,bridge=vmbr2,firewall=1,hwaddr=XX:XX:XX:XX:XX:XX,ip=dhcp,type=veth
      ostype: ubuntu
      rootfs: vmpool:subvol-201-disk-0,mountoptions=noatime,size=96G
      swap: 0
      lxc.cgroup2.devices.allow: c 195:* rwm
      lxc.cgroup2.devices.allow: c 507:* rwm
      lxc.cgroup2.devices.allow: c 239:* rwm
      lxc.mount.entry: /dev/nvidia-caps/nvidia-cap1 dev/nvidia-caps/nvidia-cap1 none bind,optional,create=file
      lxc.mount.entry: /dev/nvidia-caps/nvidia-cap2 dev/nvidia-caps/nvidia-cap2 none bind,optional,create=file
      lxc.mount.entry: /dev/nvidia0 dev/nvidia0 none bind,optional,create=file
      lxc.mount.entry: /dev/nvidiactl dev/nvidiactl none bind,optional,create=file
      lxc.mount.entry: /dev/nvidia-modeset dev/nvidia-modeset none bind,optional,create=file
      lxc.mount.entry: /dev/nvidia-uvm dev/nvidia-uvm none bind,optional,create=file
      lxc.mount.entry: /dev/nvidia-uvm-tools dev/nvidia-uvm-tools none bind,optional,create=file
      lxc.cgroup2.devices.allow: c 10:223 rwm
      lxc.mount.entry: /dev/uinput dev/uinput none bind,optional,create=file

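- There are a lot of Nvidia-related devices; for convenience, all of them are mapped into the LXC.

- /dev/uinput has to be mapped in as well.

- The numbers after lxc.cgroup2.devices.allow are device major numbers and must match what ls -al /dev/nvidia* reports on the host. Most of these settings were already covered in the earlier post. 239 is the major number of /dev/nvidia-caps/nvidia-cap1 and nvidia-cap2; since cap1 and cap2 are mapped in too, the permission is added along with them. /dev/uinput has major number 10 (ls -al /dev/uinput), so it is added at the end as well.

- (It is not entirely clear that this many permissions are needed, especially for the caps devices; they may not need a permission entry at all.)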
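Since the major numbers can differ from host to host, reading them off by hand is easy to get wrong. The following is a minimal sketch that turns a device node into the matching allow line; the function name `cgroup_allow_line` and its `exact` mode are hypothetical, and it assumes GNU `stat` and character devices (which is what the nodes in this post are).

```shell
#!/usr/bin/env bash
# Sketch: derive an lxc.cgroup2.devices.allow line from a device node.
# GNU stat prints the major (%t) and minor (%T) numbers in hex, so both
# are converted to decimal before being written out.
# Assumes the node is a character device, hence the fixed "c".
cgroup_allow_line() {
    local node="$1" mode="${2:-wildcard}" major_hex minor_hex
    major_hex=$(stat -c '%t' "$node") || return 1
    if [ "$mode" = "exact" ]; then
        # Pin the minor number too, as the config does for /dev/uinput.
        minor_hex=$(stat -c '%T' "$node") || return 1
        printf 'lxc.cgroup2.devices.allow: c %d:%d rwm\n' "0x$major_hex" "0x$minor_hex"
    else
        # Allow every minor number under this major.
        printf 'lxc.cgroup2.devices.allow: c %d:* rwm\n' "0x$major_hex"
    fi
}
```

On the Proxmox host, something like `for n in /dev/nvidia0 /dev/nvidia-uvm /dev/nvidia-caps/nvidia-cap1; do cgroup_allow_line "$n"; done | sort -u` would print the wildcard lines, and `cgroup_allow_line /dev/uinput exact` the pinned one. The output is meant to be compared against the config by eye, not pasted blindly.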

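As for the Nvidia driver itself, everything is the same as in the earlier post: the Proxmox host needs the GPU driver installed, and the LXC needs a kernel-less (userspace-only) driver of the same version. The check is still nvidia-smi: [set-up-a-new-proxmox-server]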
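The version check can be sketched as a comparison of two strings. This is only a sketch: the helper name `same_driver_version` is hypothetical, and the container ID 201 in the usage comment is an assumption read off the rootfs line of the config in this post.

```shell
#!/usr/bin/env bash
# Sketch: succeed only when both driver version strings are non-empty and identical.
same_driver_version() {
    local host_ver="$1" lxc_ver="$2"
    [ -n "$host_ver" ] && [ "$host_ver" = "$lxc_ver" ]
}

# Possible usage on the Proxmox host (201 is an assumed container ID):
#   host=$(nvidia-smi --query-gpu=driver_version --format=csv,noheader | head -n1)
#   guest=$(pct exec 201 -- nvidia-smi --query-gpu=driver_version --format=csv,noheader | head -n1)
#   same_driver_version "$host" "$guest" && echo "driver versions match"
```

An empty string on either side is treated as a mismatch, so a failing nvidia-smi inside the LXC does not pass as "same version".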

With that, every Nvidia device referenced in the Docker compose file is mapped into the LXC. In theory, steam-headless should now be able to run.
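Before starting the container, it may be worth confirming inside the LXC that the mapped nodes are really there. A minimal sketch, with a hypothetical helper name `missing_devices`; it only checks that each path exists, not that its permissions are right.

```shell
#!/usr/bin/env bash
# Sketch: report every given device path that does not exist.
# Returns 0 when all paths are present, 1 when at least one is missing.
missing_devices() {
    local node rc=0
    for node in "$@"; do
        if [ ! -e "$node" ]; then
            echo "missing: $node"
            rc=1
        fi
    done
    return "$rc"
}
```

Run inside the LXC with the device list from the compose file, for example `missing_devices /dev/fuse /dev/uinput /dev/nvidia0 /dev/nvidiactl /dev/nvidia-modeset /dev/nvidia-uvm /dev/nvidia-uvm-tools /dev/nvidia-caps/nvidia-cap1 /dev/nvidia-caps/nvidia-cap2`. Any "missing" line points back at the LXC config above.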

Results

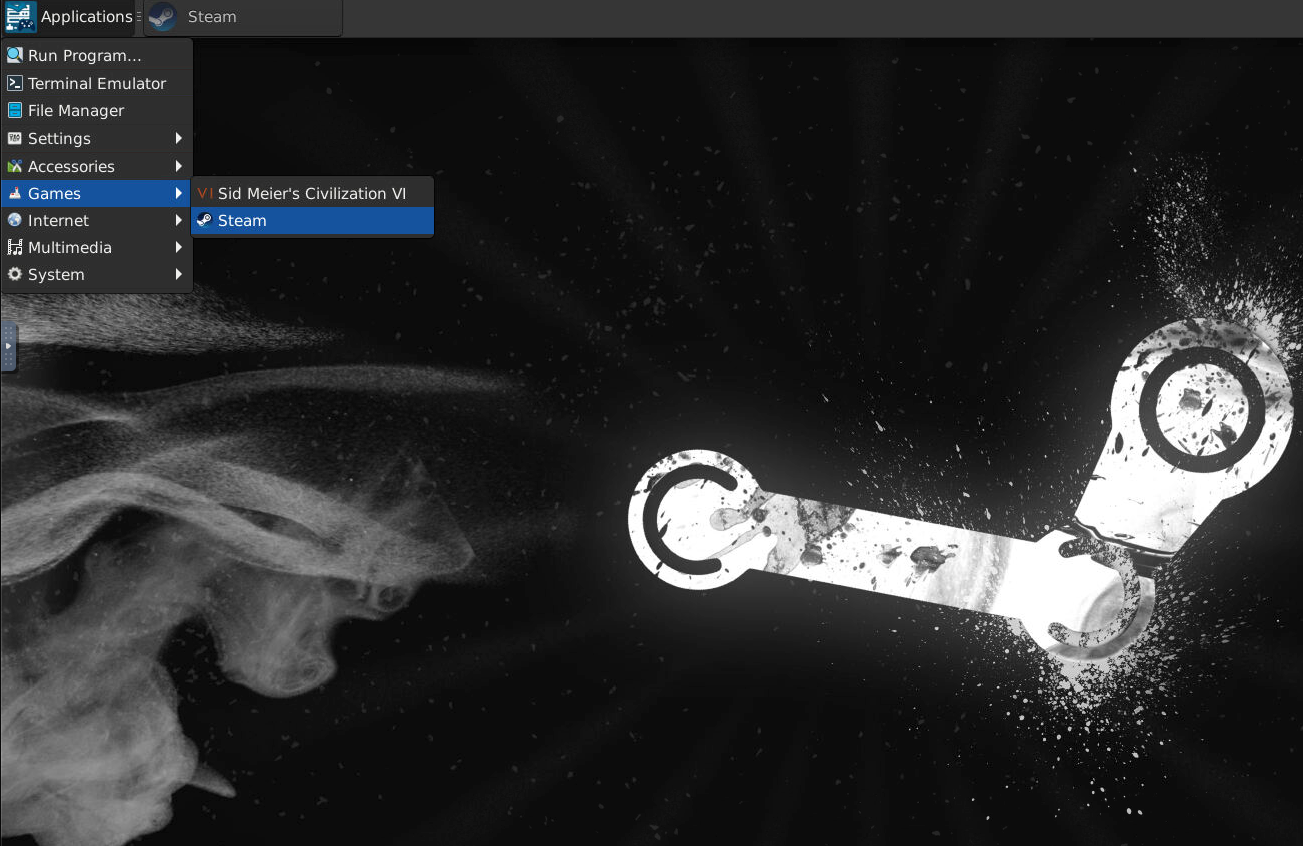

Visiting http://<your_lxc_ip>:8083/web/ should bring up the steam-headless web UI. It is effectively a Linux desktop: open the preinstalled Steam, download a game, set Proton as the compatibility layer, and that is it.

![start-page](../../assets/posts/2024/headless-steam-in-lxc-with-gpu-image-1.png)

Find Steam, log in, download a game, sort out the compatibility-layer settings, enable streaming, set up pairing, and so on.

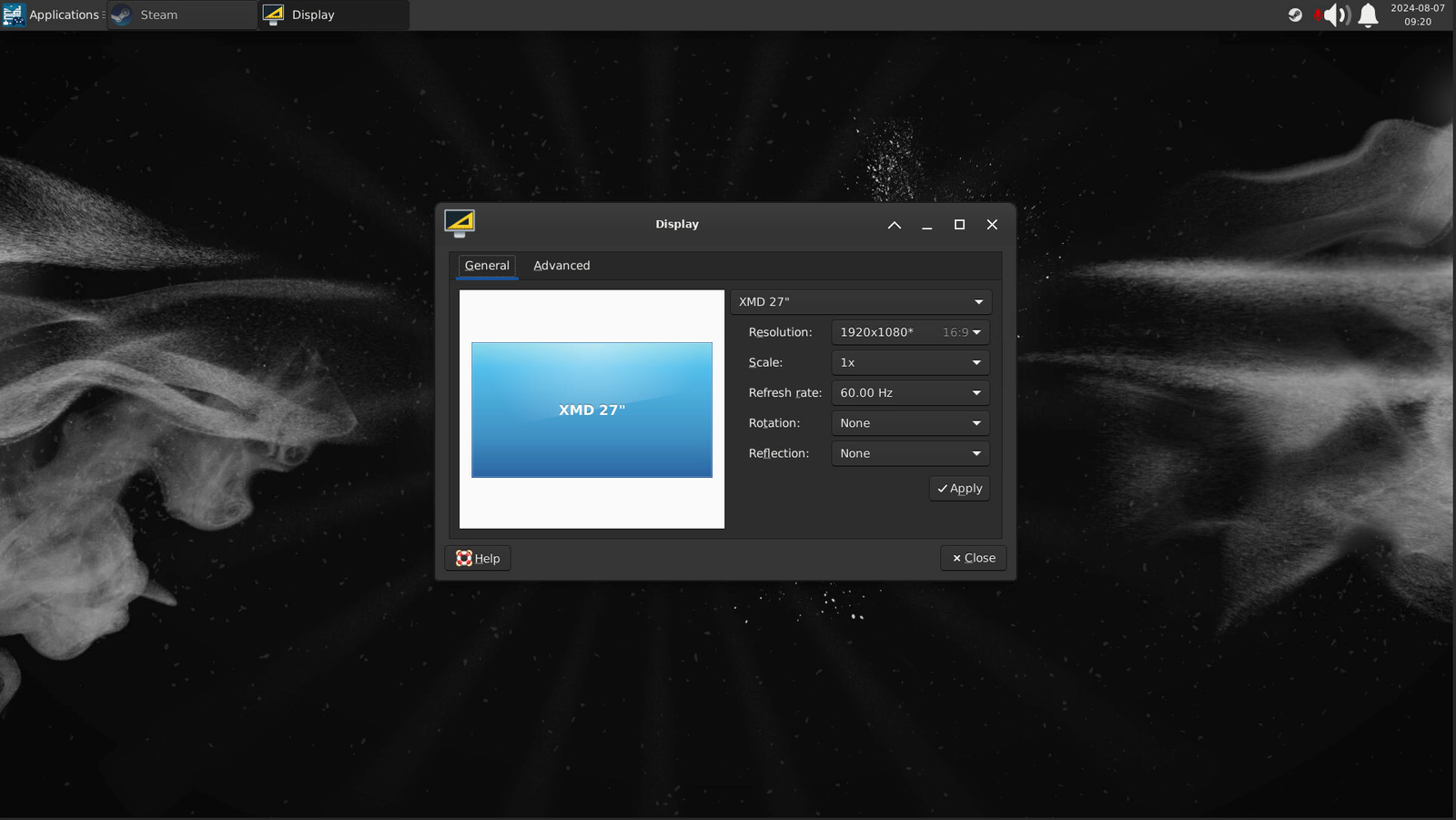

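The resolution will most likely need adjusting. Here it is left at 1080p to keep the web UI convenient; for modest requirements that is fine for gaming too.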

At this point, if the LXC is on the LAN, another computer should already be able to stream games from it.